In this tutorial, we will do some web scraping with Python, using the BeautifulSoup library.

Web scraping means obtaining information from the web. A pre-warning: depending on the circumstances, it can be legal or illegal. Gathering data from other sites can infringe their policies and other data rights, and there are ethical as well as legal concerns involved. Check the laws that apply before scraping any website.

To check whether a website allows web scraping, look at its “robots.txt” file, which lists the paths that automated crawlers may and may not visit. You can find this file by appending “/robots.txt” to the site's root URL. For example, for the Flipkart website the URL is www.flipkart.com/robots.txt.
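Python's standard library can check these rules for you. The sketch below uses `urllib.robotparser`. The robots.txt rules, the `my-scraper` user agent, and the example.com URLs are hypothetical and serve only to show how `can_fetch()` answers.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_lines, user_agent, url):
    """Return True if the given robots.txt lines let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_lines)  # rules supplied as a list of text lines
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt rules, for illustration only
sample_rules = [
    "User-agent: *",
    "Disallow: /checkout/",
]

print(is_allowed(sample_rules, "my-scraper", "https://www.example.com/products"))       # True
print(is_allowed(sample_rules, "my-scraper", "https://www.example.com/checkout/cart"))  # False
```

For a live site, you would skip `parse()` and instead call `set_url()` with the site's robots.txt URL, followed by `read()`, which downloads and parses the file.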

The good news is that many sites don't mind if we scrape information from them. Why gather this information at all? To put it simply, the reasons are:

- to analyze the data

- to gather website information faster

- to find hidden trends and details

- to draw insights from the data

- to learn how web scraping works

- for project work, assignments, and other purposes

Examples

- Scraping social media websites such as Twitter to find out what is trending.

- Collecting details about job openings and interviews from different websites and listing them in one place, so that they are easily accessible to the user.

- Collecting email IDs and then sending bulk emails.

The Internet has information related to almost every field you can think of! You name it, and the data for it is out there (provided you search a little). So, back to web scraping: how is it done?

A big term like web scraping deserves a proper definition, so here it is: web scraping is an automated method of extracting large amounts of data from websites. This data is usually in an unstructured format, and web scraping helps collect it and store it in a structured form.

Ways to Collect Data

- Application Programming Interfaces (APIs): many websites offer APIs that let you access their data in a predefined manner. With APIs, you can avoid parsing HTML and instead access the data directly in formats like JSON and XML. For example, Facebook offers the Facebook Graph API, which allows the retrieval of data posted on Facebook.

- Accessing the HTML of the webpage and extracting useful information from it. This technique is called web scraping, web harvesting, or web data extraction. HTML is primarily a way to present content visually to users, so the data we want has to be picked out of the markup.
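Here is a small sketch that makes the difference concrete. The JSON payload and the HTML snippet are both hypothetical. The point is that the API returns a structured value directly, while with HTML the number has to be parsed out of display text.

```python
import json

from bs4 import BeautifulSoup

# Hypothetical API response: the value arrives already structured
api_response = '{"city": "Example City", "temperature_f": 57}'
data = json.loads(api_response)
print(data["temperature_f"])  # 57

# Hypothetical HTML showing the same value: it must be parsed out of the markup
html = '<div class="weather"><h2>Example City</h2><p class="temp">High: 57 °F</p></div>'
soup = BeautifulSoup(html, "html.parser")
temp_text = soup.find("p", class_="temp").get_text()  # "High: 57 °F"
temp_value = int(temp_text.split()[1])                # pick the number out of the text
print(temp_value)  # 57
```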

Web Scraping in Python

Python, with its powerful libraries, makes scraping data easy. Commonly used libraries are:

- Beautiful Soup 4

- Scrapy

- Requests

- Selenium

- lxml (HTML and XML parsing library)

How Web Scraping Works

While scraping the web, we write code that sends a request to the server hosting the desired page. Generally, our code downloads that page's source code, just as a browser would. But instead of displaying the page visually, it filters through the page looking for the HTML elements we specify and extracts their contents.
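The request, download, and filter steps can be sketched as two small functions. One fetches the page with the requests library, and the other extracts elements with BeautifulSoup. The function names and the sample HTML are hypothetical. The demo runs offline on a string, so you can try the parsing half without a network connection.

```python
from bs4 import BeautifulSoup


def fetch_html(url):
    """Download a page's HTML source, as a browser would (requires requests)."""
    import requests  # imported here so extract_text() works without requests installed

    response = requests.get(url, timeout=10)
    response.raise_for_status()  # stop early on 4xx/5xx responses
    return response.content


def extract_text(html, tag, css_class):
    """Parse HTML and return the text of every <tag> element with css_class."""
    soup = BeautifulSoup(html, "html.parser")
    return [element.get_text(strip=True) for element in soup.find_all(tag, class_=css_class)]


# Offline demo on a small hypothetical page
sample_html = """
<ul>
  <li class="item">First</li>
  <li class="item">Second</li>
  <li class="other">Ignored</li>
</ul>
"""
print(extract_text(sample_html, "li", "item"))  # ['First', 'Second']
```

Against a real page, you would chain the two functions, for example `extract_text(fetch_html(url), "p", "some-class")`, substituting your own URL, tag, and class.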

In this blog, I will show how to perform web scraping using Python's BeautifulSoup library.

NOTE: For a better experience and understanding, you can also watch the video tutorial:

https://youtu.be/jEhJgCih22g

BeautifulSoup

Beautiful Soup (bs4) is a parsing library that can work with different parsers. A parser is simply a program that can extract data from HTML and XML documents. BeautifulSoup is built on top of HTML parsers such as html.parser, lxml, and html5lib.

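Python's standard library includes a parser, html.parser, which Beautiful Soup can use without any extra installation. It's flexible and forgiving, but a little slow, so we can swap in a faster parser such as lxml if we need the speed. If no parser is named, Beautiful Soup uses the best one installed, so it is good practice to name it explicitly.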
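Here is a minimal sketch of swapping parsers, assuming you want lxml whenever it is available. Beautiful Soup raises `FeatureNotFound` when the requested parser is not installed, so the hypothetical `make_soup()` helper falls back to the built-in `html.parser`.

```python
from bs4 import BeautifulSoup, FeatureNotFound


def make_soup(html):
    """Parse html with lxml when it is installed, else fall back to html.parser."""
    try:
        return BeautifulSoup(html, "lxml")  # faster, but needs `pip install lxml`
    except FeatureNotFound:
        return BeautifulSoup(html, "html.parser")  # ships with Python


soup = make_soup("<ul><li>Tonight</li><li>Thursday</li></ul>")
print([li.get_text() for li in soup.find_all("li")])  # ['Tonight', 'Thursday']
```

The two parsers can build slightly different trees from badly broken HTML, so if results must be reproducible, pick one parser and install it everywhere the scraper runs.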

One advantage of BS4 is its ability to detect encoding automatically, which allows it to handle HTML documents with special characters. In addition, BS4 helps us navigate a parsed document and find what we need, which makes common scraping tasks quick and painless.
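Here is a small sketch of both features, using a hypothetical page passed in as raw bytes. Given bytes, Beautiful Soup works out the encoding itself (here from the `<meta charset>` tag) and records its guess in `original_encoding`. Then `find()` and `.parent` let us move around the parsed tree.

```python
from bs4 import BeautifulSoup

# Raw bytes, as a server would send them; "é" is encoded as UTF-8
raw = (
    '<html><head><meta charset="utf-8"></head>'
    '<body><div id="main"><p>Café forecast</p></div></body></html>'
).encode("utf-8")

soup = BeautifulSoup(raw, "html.parser")  # bytes in: Beautiful Soup detects the encoding
print(soup.original_encoding)  # the encoding it settled on, e.g. 'utf-8'

paragraph = soup.find("p")
print(paragraph.get_text())        # Café forecast
print(paragraph.parent.get("id"))  # main (navigated up to the enclosing div)
```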

The website

The website we will scrape is the National Weather Service forecast page below. Its content is in the public domain, hence the data can be scraped!

https://forecast.weather.gov/MapClick.php?lat=37.7772&lon=-122.4168

Before we proceed, a little knowledge of HTML, such as tags and classes, is required. If you don't know these yet, that's not a problem: you can learn them from the video tutorial linked above.

Start by installing BeautifulSoup, either directly in a Jupyter notebook or in the Anaconda Command Prompt, as shown below.

!pip install beautifulsoup4  # command in a Jupyter Notebook cell

conda install -c anaconda beautifulsoup4 # Anaconda Command Prompt installation

- The requests library will make a GET request to a web server, which will download the HTML contents of a given web page.

- BeautifulSoup will parse the document.

- To understand how the page is structured, let us go to the website.

- Scroll to the Extended Forecast section.

- Right-click on it and choose Inspect.

- Make sure the Elements tab is selected.

- Note the `<div>` tag highlighted in blue; its id is `seven-day-forecast`.

- Copy this tag.

- Paste it into the console and expand it to find the class of the forecast items inside it.

- That class is `tombstone-container`.

This div contains the extended forecast items. If you click around in the console and explore the div, you’ll discover that each forecast item (like “Tonight”, “Thursday”, and “Thursday Night”) is contained in a div with the class `tombstone-container`.

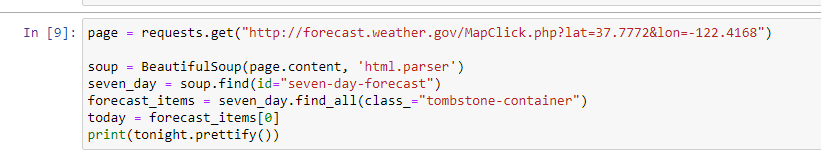

This step works as follows:

- Download the web page containing the forecast.

- Create a `BeautifulSoup` object to parse the page.

- Find the `div` with id `seven-day-forecast`, and assign it to `seven_day`.

- Inside `seven_day`, find each individual forecast item.

- Extract and print the first and second forecast items.
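The steps above might look like the following sketch. The live page's markup can change, so the demo parses a simplified, hypothetical copy of the structure described earlier: a `seven-day-forecast` div holding `tombstone-container` divs. The name `get_forecast_items()` is chosen for illustration.

```python
from bs4 import BeautifulSoup


def get_forecast_items(html):
    """Return the forecast item divs inside the div with id 'seven-day-forecast'."""
    soup = BeautifulSoup(html, "html.parser")
    seven_day = soup.find(id="seven-day-forecast")           # the Extended Forecast section
    return seven_day.find_all(class_="tombstone-container")  # one div per forecast period


# Simplified, hypothetical copy of the page structure
sample_html = """
<div id="seven-day-forecast">
  <div class="tombstone-container"><p class="period-name">Today</p></div>
  <div class="tombstone-container"><p class="period-name">Tonight</p></div>
</div>
"""

forecast_items = get_forecast_items(sample_html)
print(forecast_items[0].prettify())  # first forecast item
print(forecast_items[1].prettify())  # second forecast item
```

To run it on the real page, install requests and pass `requests.get(URL).content` to `get_forecast_items()`, where URL is the forecast link given earlier.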

Scrape Information from the Site

We’ll start with the name of the forecast item, the short description, and the temperature, since they’re all extracted in a similar way.

Inside the forecast item for Today is all the information we want. There are four pieces of information we can extract:

- The name of the forecast item: in this case, Today.

- The description of the conditions: this is stored in the `title` attribute of the `img` tag.

- A short description of the conditions: in this case, Partly Sunny then Rain Likely.

- The high temperature: in this case, 57 degrees.
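Here is a sketch of extracting those four pieces from a single forecast item. The class name `period-name` comes from the page inspection above. The class names `short-desc` and `temp` are assumptions about the page's markup, so confirm them by inspecting the live page. The sample HTML, including the `title` text, is hypothetical.

```python
from bs4 import BeautifulSoup

# Hypothetical markup for one forecast item, modeled on the fields listed above
item_html = """
<div class="tombstone-container">
  <p class="period-name">Today</p>
  <p><img src="rain.png" alt="" title="Today: Partly sunny, then rain likely. High near 57."></p>
  <p class="short-desc">Partly Sunny then Rain Likely</p>
  <p class="temp temp-high">High: 57 °F</p>
</div>
"""

today = BeautifulSoup(item_html, "html.parser").find(class_="tombstone-container")

period = today.find(class_="period-name").get_text()     # name of the forecast item
short_desc = today.find(class_="short-desc").get_text()  # short description
temp = today.find(class_="temp").get_text()              # matches class="temp temp-high" too
desc = today.find("img")["title"]                        # full description, from the img title

print(period, short_desc, temp, desc, sep="\n")
```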

Scraping all the information from the Site

Now that we know how to extract each individual piece of information, we can combine our knowledge with CSS selectors and list comprehensions to extract everything at once.

To do this, we:

- Select all items with the class `period-name` inside an item with the class `tombstone-container` in `seven_day`.

- Use a list comprehension to call the `get_text` method on each matched tag.
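Those two steps might look like this, run on a trimmed-down, hypothetical copy of the forecast markup. `select()` takes a CSS selector, and the space in `.tombstone-container .period-name` means "nested anywhere inside".

```python
from bs4 import BeautifulSoup

# Hypothetical, trimmed-down forecast markup
html = """
<div id="seven-day-forecast">
  <div class="tombstone-container"><p class="period-name">Today</p></div>
  <div class="tombstone-container"><p class="period-name">Tonight</p></div>
  <div class="tombstone-container"><p class="period-name">Thursday</p></div>
</div>
"""

seven_day = BeautifulSoup(html, "html.parser").find(id="seven-day-forecast")

# CSS selector: every .period-name element nested inside a .tombstone-container
period_tags = seven_day.select(".tombstone-container .period-name")
periods = [pt.get_text() for pt in period_tags]
print(periods)  # ['Today', 'Tonight', 'Thursday']
```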

This technique gets us each of the period names, in order. We can apply the same technique to get the other three fields.
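Applying the same pattern to the remaining fields could look like the sketch below. As before, the markup is a hypothetical stand-in. The `short-desc` and `temp` class names are assumptions to verify against the live page. The detailed description comes from each `img` tag's `title` attribute.

```python
from bs4 import BeautifulSoup

# Hypothetical markup with two forecast items
html = """
<div id="seven-day-forecast">
  <div class="tombstone-container">
    <p class="period-name">Today</p>
    <img title="Today: hypothetical detailed forecast.">
    <p class="short-desc">Partly Sunny then Rain Likely</p>
    <p class="temp temp-high">High: 57 °F</p>
  </div>
  <div class="tombstone-container">
    <p class="period-name">Tonight</p>
    <img title="Tonight: hypothetical detailed forecast.">
    <p class="short-desc">Rain</p>
    <p class="temp temp-low">Low: 48 °F</p>
  </div>
</div>
"""

seven_day = BeautifulSoup(html, "html.parser").find(id="seven-day-forecast")

# Same pattern as for the period names: a CSS selector plus a list comprehension
short_descs = [sd.get_text() for sd in seven_day.select(".tombstone-container .short-desc")]
temps = [t.get_text() for t in seven_day.select(".tombstone-container .temp")]
descs = [img["title"] for img in seven_day.select(".tombstone-container img")]

print(short_descs)  # ['Partly Sunny then Rain Likely', 'Rain']
print(temps)        # ['High: 57 °F', 'Low: 48 °F']
print(descs)
```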

Convert the Scraped Data into Pandas Data Frame

Combining the scraped fields into a pandas DataFrame makes it easier to analyze the data and draw conclusions from the analysis.
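Here is a minimal sketch of that step. It assumes the four lists from the previous steps, filled here with hypothetical sample values. Each list becomes a column, and pandas string methods turn text like "High: 57 °F" into numbers you can average or filter on. The column names and the extra `temp_num` and `is_night` columns are illustrative choices.

```python
import pandas as pd

# Lists as produced by the scraping steps (hypothetical sample values)
periods = ["Today", "Tonight"]
short_descs = ["Partly Sunny then Rain Likely", "Rain"]
temps = ["High: 57 °F", "Low: 48 °F"]
descs = ["Today: hypothetical detailed forecast.", "Tonight: hypothetical detailed forecast."]

weather = pd.DataFrame({
    "period": periods,
    "short_desc": short_descs,
    "temp": temps,
    "desc": descs,
})

# Pull the number out of strings like "High: 57 °F" so we can do arithmetic on it
weather["temp_num"] = weather["temp"].str.extract(r"(\d+)", expand=False).astype(int)
# Night-time periods report a "Low" temperature
weather["is_night"] = weather["temp"].str.contains("Low")

print(weather)
print(weather["temp_num"].mean())  # 52.5
```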

Video Tutorial

For better understanding and clarity, you can watch the practical demonstration of the complete tutorial in the video linked above.

Courses for Data Science

✔R course regular Link

https://www.udemy.com/course/r-programming-for-absolute-beginners-s/?referralCode=8D0E0096DB92503232F4

✔Power BI Master Class Link:

https://machinemantra.in/courses/power-bi-master-class-for-data-science/

✔Python Course Link

https://www.udemy.com/course/data-analysis-visualization-with-python/?referralCode=834CBC3B42CEB204D5E3

Sources

https://www.edureka.co/blog/web-scraping-with-python/

https://realpython.com/python-web-scraping-practical-introduction/

https://www.geeksforgeeks.org/implementing-web-scraping-python-beautiful-soup/

https://www.dataquest.io/blog/web-scraping-tutorial-python/